I have a recurring vision of humanity drowning in information: information that's flooding in faster than we can possibly ingest it, let alone vet it for reliability or truth. Even before Generative AI, more content was being created than any person could ever hope to consume.

It's only getting faster, wilder, and more treacherous.

Until recently, all content required at least one person's time and effort to create. Human authorship was the bottleneck. Now we've got a growing library of work produced with fleeting or merely superficial human guidance, carrying only the digitized, vectorized echoes of wisdom. And it's often impossible to tell which is which.

What does this mean for how we learn and grow as people? How can we, a civilization built on oral tradition and taught through story and text, hope to know and trust anything going forward?

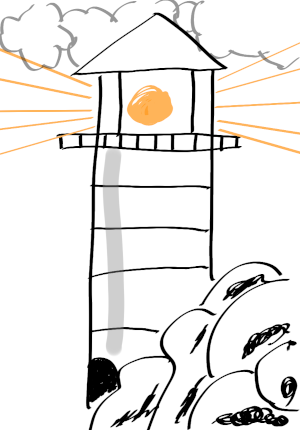

It seems to me that now, more than ever, we need to re-ground ourselves in how we know things. We need to establish a light to guide our way through the rising flood of synthetic content.

As we continue to use Generative AI, we must pay more attention to epistemology: the study of the nature of knowledge and how we come to know things.

In An Essay Concerning Human Understanding, John Locke suggests that the mind at birth is a blank slate, and that knowledge is written on it through our experiences. With the dawn of Generative AI and the way it significantly lowers the barrier to entry for creative acts (which is undoubtedly a good thing!), we face new risks that resemble those that came with industrial automation, but are subtly and significantly different. This doesn't just change how fast or easily we can do things. It changes how we think and develop our craft. All of our crafts.

Using Large Language Models (LLMs) like ChatGPT, Claude, and Gemini is a powerful way to explore our ideas and sharpen our thoughts. I'm using ChatGPT right now as I write this, and it would be tantalizingly easy to let it write for me. I hold back, though, because of my concern that if I let it create my words (even in my own voice, and it is scarily good at mimicry!), I wouldn't be living up to my deep value of authenticity. Even more concerning is the very real risk that I'd erode my ability to organize my thoughts and write effectively on my own.

There are three key questions that I'd like to explore in depth:

- How do we distinguish good content from bad content?

- How do we prevent the erosion of critical thinking, creativity, and innovation as people inevitably offload hard thought and research onto AI?

- What happens when AI-generated synthetic slop contaminates the training data?

This is a pivotal moment in human creative history. We need a better philosophical understanding of when and how to use AI constructively, and of when overreliance on it becomes irresponsible, with far-reaching consequences. What happens to the concept of authenticity as the tide of synthetic content washes over us?

Stick with me. We'll find our way together. I'm sure that we can keep the light on.